Voice MCP

Enables voice interactions with Claude and other LLMs, using an OpenAI API key or local services for speech-to-text (STT) and text-to-speech (TTS).

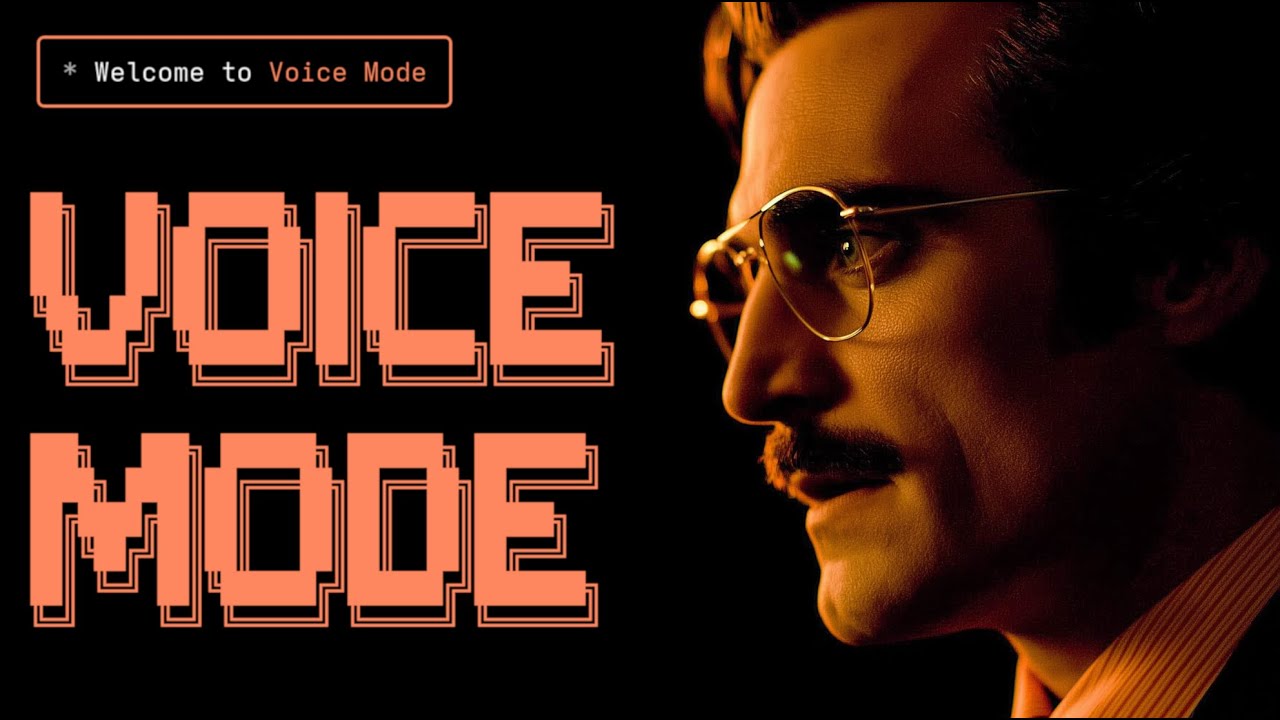

VoiceMode

Natural voice conversations with Claude Code (and other MCP capable agents)

VoiceMode enables natural voice conversations with Claude Code. Voice isn't about replacing typing; it's about being available when typing isn't.

Perfect for:

- Walking to your next meeting

- Cooking while debugging

- Giving your eyes a break after hours of screen time

- Holding a coffee (or a dog)

- Any moment when your hands or eyes are busy


Quick Start

Requirements: A computer with a microphone and speakers

Option 1: Claude Code Plugin (Recommended)

The fastest way for Claude Code users to get started:

```bash
# Add the VoiceMode marketplace
claude plugin marketplace add mbailey/voicemode

# Install the VoiceMode plugin
claude plugin install voicemode@voicemode

# Inside Claude Code: install dependencies (CLI, local voice services)
/voicemode:install

# Inside Claude Code: start talking!
/voicemode:converse
```

Option 2: Python installer package

Installs dependencies and the VoiceMode Python package.

```bash
# Install UV package manager (if needed)
curl -LsSf https://astral.sh/uv/install.sh | sh

# Run the installer (sets up dependencies and local voice services)
uvx voice-mode-install

# Add to Claude Code
claude mcp add --scope user voicemode -- uvx --refresh voice-mode

# Optional: Add OpenAI API key as fallback for local services
export OPENAI_API_KEY=your-openai-key

# Start a conversation
claude converse
```

For manual setup, see the Getting Started Guide.

Features

- Natural conversations - speak naturally, hear responses immediately

- Works offline - optional local voice services (Whisper STT, Kokoro TTS)

- Low latency - fast enough to feel like a real conversation

- Smart silence detection - stops recording when you stop speaking

- Privacy options - run entirely locally or use cloud services

Compatibility

Platforms: Linux, macOS, Windows (WSL), NixOS

Python: 3.10-3.14
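You can check the Python requirement before installing. The following is a minimal sketch and is not part of VoiceMode. The function name `python_version_supported` is hypothetical, and the sketch treats 3.10 and 3.14 as inclusive bounds.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of VoiceMode): succeed if a Python version
# string such as "3.12.1" falls within the supported 3.10-3.14 range.
python_version_supported() {
  local major minor rest
  # Split on dots: "3.12.1" -> major=3, minor=12, rest=1
  IFS=. read -r major minor rest <<< "$1"
  # Non-numeric minor versions make the comparison fail (error text suppressed)
  [ "$major" = "3" ] && [ "${minor:-0}" -ge 10 ] 2>/dev/null && [ "$minor" -le 14 ]
}

# Example: check the python3 found on PATH
if python_version_supported "$(python3 -c 'import platform; print(platform.python_version())' 2>/dev/null)"; then
  echo "python3 is within the supported range"
else
  echo "python3 is missing or outside 3.10-3.14"
fi
```

The helper compares only the major and minor components, so patch releases and release-candidate suffixes such as `3.13.0rc1` are accepted.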

Configuration

VoiceMode works out of the box. For customization:

```bash
# Set OpenAI API key (if using cloud services)
export OPENAI_API_KEY="your-key"

# Or configure via file
voicemode config edit
```

See the Configuration Guide for all options.

Permissions Setup (Optional)

To use VoiceMode without permission prompts, add to ~/.claude/settings.json:

```json
{
  "permissions": {
    "allow": [
      "mcp__voicemode__converse",
      "mcp__voicemode__service"
    ]
  }
}
```

See the Permissions Guide for more options.
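If `~/.claude/settings.json` already exists, pasting the snippet in by hand can overwrite other settings. The sketch below merges the two permission entries into the file and leaves everything else untouched. It assumes `jq` is installed, and the function name `add_voicemode_permissions` is hypothetical, not part of VoiceMode or Claude Code.

```bash
#!/usr/bin/env bash
# Hypothetical helper: merge the VoiceMode permissions into a Claude Code
# settings file, keeping any existing settings. Requires jq.
add_voicemode_permissions() {
  local file="${1:-$HOME/.claude/settings.json}"
  local tmp
  mkdir -p "$(dirname "$file")" || return 1
  # Start from an empty JSON object if the file does not exist yet
  [ -f "$file" ] || echo '{}' > "$file"
  # Write to a temp file next to the target, then replace the target
  tmp="$(mktemp "$file.XXXXXX")" || return 1
  # Append only the tool names not already present in permissions.allow
  if jq '(.permissions.allow // []) as $a
         | .permissions.allow = $a + (["mcp__voicemode__converse", "mcp__voicemode__service"] - $a)' \
       "$file" > "$tmp"; then
    mv "$tmp" "$file"
  else
    rm -f "$tmp"
    return 1
  fi
}
```

Run `add_voicemode_permissions` with no argument to update `~/.claude/settings.json`. Running it again is harmless because entries that are already present are not added twice.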

Local Voice Services

For privacy or offline use, install local speech services:

- Whisper.cpp - Local speech-to-text

- Kokoro - Local text-to-speech with multiple voices

Both expose the same API as OpenAI, so VoiceMode switches seamlessly between local and cloud services.
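Because the API is shared, the same request works against OpenAI or a compatible local server, and only the base URL changes. The sketch below shows the speech-to-text half with `curl`, using OpenAI's `/audio/transcriptions` request shape. The function name `transcribe` is hypothetical. Any local base URL is an assumption that depends on how your Whisper server was set up.

```bash
#!/usr/bin/env bash
# Hypothetical helper: send an audio file to an OpenAI-compatible
# speech-to-text endpoint and print the JSON response. Only the base URL
# differs between OpenAI and a compatible local server.
transcribe() {
  local base_url="$1" audio_file="$2"
  # -f: exit non-zero on HTTP errors such as 401 (bad key) or 404
  curl -sSf "$base_url/audio/transcriptions" \
    -H "Authorization: Bearer ${OPENAI_API_KEY:-placeholder}" \
    -F "file=@$audio_file" \
    -F "model=whisper-1"
}
```

Use `transcribe https://api.openai.com/v1 note.wav` for the cloud service. For a local server, use `transcribe http://127.0.0.1:PORT/v1 note.wav`, replacing `PORT` with the port your local Whisper server listens on.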

Installation Details

Ubuntu/Debian

```bash
sudo apt update
sudo apt install -y ffmpeg gcc libasound2-dev libasound2-plugins libportaudio2 portaudio19-dev pulseaudio pulseaudio-utils python3-dev
```

WSL2 users: The pulseaudio packages above are required for microphone access.

Fedora/RHEL

```bash
sudo dnf install alsa-lib-devel ffmpeg gcc portaudio portaudio-devel python3-devel
```

macOS

```bash
brew install ffmpeg node portaudio
```

NixOS

```bash
# Use development shell
nix develop github:mbailey/voicemode

# Or install into your user profile
nix profile install github:mbailey/voicemode
```

From source

```bash
git clone https://github.com/mbailey/voicemode.git
cd voicemode
uv tool install -e .
```

NixOS system-wide

```nix
# In /etc/nixos/configuration.nix
environment.systemPackages = [
  (builtins.getFlake "github:mbailey/voicemode").packages.${pkgs.system}.default
];
```

Troubleshooting

| Problem | Solution |
|---|---|
| No microphone access | Check terminal/app permissions. WSL2 needs the pulseaudio packages. |
| UV not found | Run `curl -LsSf https://astral.sh/uv/install.sh \| sh` |
| OpenAI API error | Verify `OPENAI_API_KEY` is set correctly |
| No audio output | Check system audio settings and available devices |
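Several of the problems above can be checked from the shell before starting a conversation. The following is a minimal sketch, and the function names are hypothetical rather than part of the VoiceMode CLI. It checks for `uv` and `ffmpeg` (both used by the install steps) and reports whether `OPENAI_API_KEY` is set. It cannot tell whether the key is actually valid.

```bash
#!/usr/bin/env bash
# Hypothetical self-check for the problems listed in the troubleshooting
# table; these functions are not part of the VoiceMode CLI.
check_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "ok: $1"
  else
    echo "missing: $1"
    return 1
  fi
}

check_openai_key() {
  # Checks presence only; an invalid key still causes API errors
  if [ -n "${OPENAI_API_KEY:-}" ]; then
    echo "ok: OPENAI_API_KEY is set"
  else
    echo "missing: OPENAI_API_KEY"
    return 1
  fi
}

voicemode_self_check() {
  local status=0
  check_cmd uv || status=1
  check_cmd ffmpeg || status=1
  # The key is only needed for cloud services, so report it without failing
  check_openai_key || true
  return "$status"
}
```

`voicemode_self_check` prints one line per check and returns non-zero only when a required command is missing. A missing key does not cause a failure because local-only setups don't need it.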

Save Audio for Debugging

```bash
export VOICEMODE_SAVE_AUDIO=true
# Files saved to ~/.voicemode/audio/YYYY/MM/
```
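To find the recordings from a session you just ran, you can list the newest files in the current month's directory. This sketch assumes the `~/.voicemode/audio/YYYY/MM/` layout described above and makes no assumption about file names. The function name `latest_voicemode_audio` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list the most recent recordings saved when
# VOICEMODE_SAVE_AUDIO=true, assuming the ~/.voicemode/audio/YYYY/MM/ layout.
latest_voicemode_audio() {
  local count="${1:-5}"
  local dir="$HOME/.voicemode/audio/$(date +%Y)/$(date +%m)"
  if [ ! -d "$dir" ]; then
    echo "no recordings for this month in $dir" >&2
    return 1
  fi
  # Newest first: ls -t sorts by modification time
  ls -t "$dir" | head -n "$count"
}
```

For example, `latest_voicemode_audio 1` prints the name of the newest file. Recordings from earlier months are in sibling directories.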

Documentation

- Getting Started - Full setup guide

- Configuration - All environment variables

- Whisper Setup - Local speech-to-text

- Kokoro Setup - Local text-to-speech

- Development Setup - Contributing guide

Full documentation: voice-mode.readthedocs.io

Links

- Website: getvoicemode.com

- GitHub: github.com/mbailey/voicemode

- PyPI: pypi.org/project/voice-mode

- YouTube: @getvoicemode

- Twitter/X: @getvoicemode


License

MIT - A Failmode Project

mcp-name: com.failmode/voicemode
