dbt

Official MCP server for dbt (data build tool) providing integration with dbt Core/Cloud CLI, project metadata discovery, model information, and semantic layer querying capabilities.

dbt MCP Server

This MCP (Model Context Protocol) server provides a set of tools for interacting with dbt. You can use it to give AI agents context about your project in dbt Core, dbt Fusion, and dbt Platform.

Read our documentation to learn more. This blog post provides more details on what is possible with the dbt MCP server.

Experimental MCP Bundle

We publish an experimental Model Context Protocol Bundle (dbt-mcp.mcpb) with each release so that MCPB-aware clients can import this server without additional setup. Download the bundle from the latest release assets and follow Anthropic's mcpb CLI docs to install or inspect it.
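Before importing the bundle, you may want to check what it contains. The sketch below assumes, based on our reading of the MCPB format rather than anything this README states, that a `.mcpb` file is a ZIP archive with a `manifest.json` at its root. The function name `read_bundle_manifest` is hypothetical, and the `mcpb` CLI remains the supported way to inspect a bundle.

```python
from __future__ import annotations

import json
import zipfile
from pathlib import Path
from typing import IO


def read_bundle_manifest(bundle: str | Path | IO[bytes]) -> dict:
    """Return the parsed manifest.json from an MCP Bundle (.mcpb) archive.

    Assumption: the bundle is a ZIP archive with manifest.json at its root.
    """
    with zipfile.ZipFile(bundle) as archive:
        # archive.open raises KeyError if there is no top-level manifest.json.
        with archive.open("manifest.json") as fh:
            return json.load(fh)
```

After downloading `dbt-mcp.mcpb` from the release assets, `read_bundle_manifest("dbt-mcp.mcpb")` returns the manifest as a dictionary, where fields such as `name` and `version` can be checked before installing.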

Feedback

If you have comments or questions, create a GitHub Issue or join us in the community Slack in the #tools-dbt-mcp channel.

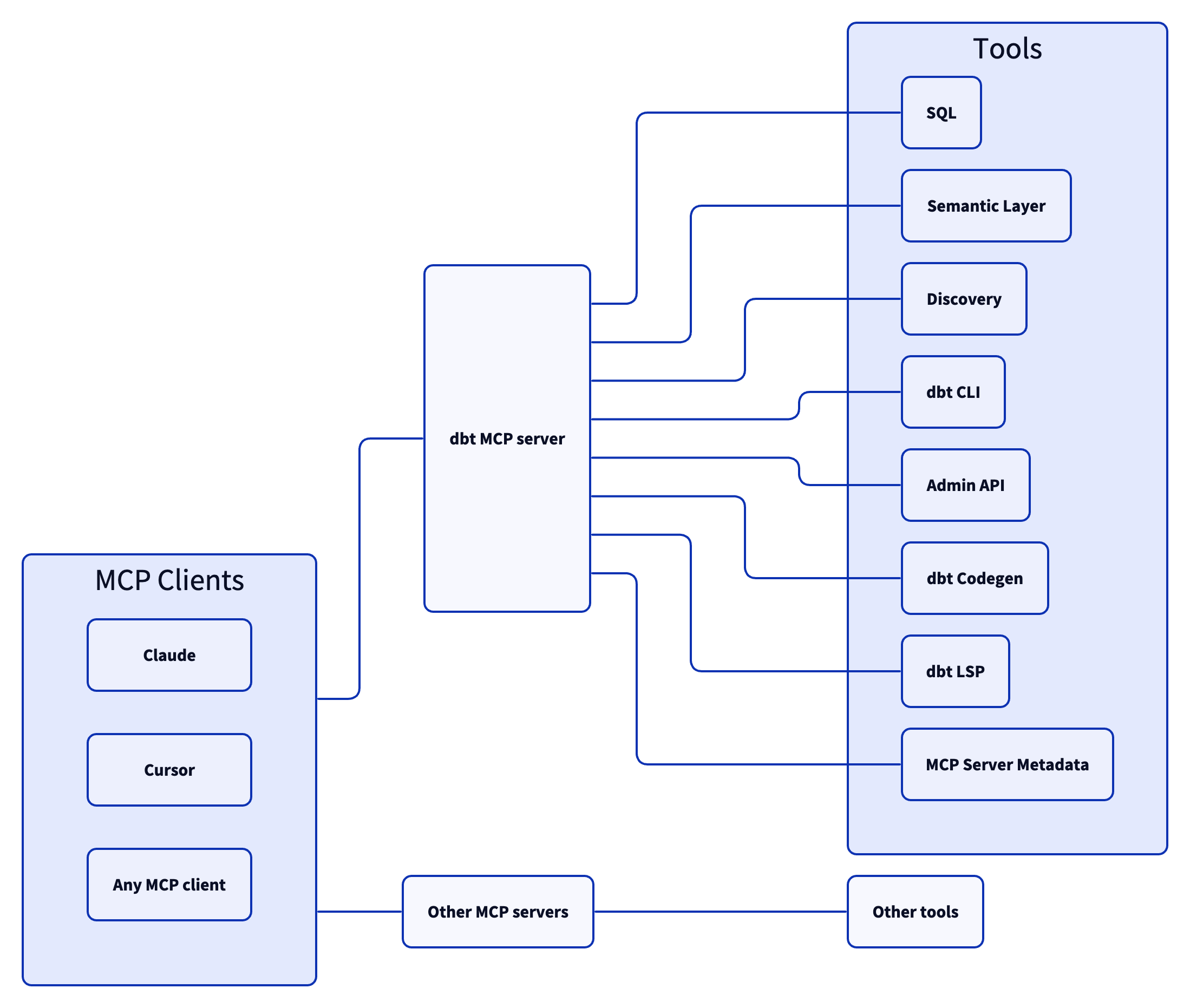

Architecture

The dbt MCP server architecture allows your agent to connect to a variety of tools, grouped into the toolsets listed below.
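An agent reaches each of these tools through MCP's JSON-RPC 2.0 messages. The sketch below builds the `tools/call` request an MCP client sends, assuming the stdio transport, where each message is a single line of JSON. The helper `make_tool_call` is hypothetical, and a real session must first complete MCP's `initialize` handshake, which this sketch omits.

```python
import itertools
import json
from typing import Any, Dict, Optional

# Monotonic request ids; MCP requires that a request id is not reused within a session.
_request_ids = itertools.count(1)


def make_tool_call(tool_name: str, arguments: Optional[Dict[str, Any]] = None) -> str:
    """Serialize an MCP tools/call request as one line of JSON-RPC 2.0."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments or {}},
    }
    # json.dumps escapes newlines inside strings, so the output is a single line,
    # as the stdio transport requires.
    return json.dumps(message, separators=(",", ":"))
```

On its first call, `make_tool_call("list_metrics")` returns `{"jsonrpc":"2.0","id":1,"method":"tools/call","params":{"name":"list_metrics","arguments":{}}}`. Which arguments each dbt tool accepts is defined by the schema the server advertises, not by this sketch.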

Tools

SQL

execute_sql, text_to_sql

Semantic Layer

get_dimensions, get_entities, get_metrics_compiled_sql, list_metrics, list_saved_queries, query_metrics

Discovery

get_all_macros, get_all_models, get_all_sources, get_exposure_details, get_exposures, get_lineage, get_macro_details, get_mart_models, get_model_children, get_model_details, get_model_health, get_model_parents, get_model_performance, get_related_models, get_seed_details, get_semantic_model_details, get_snapshot_details, get_source_details, get_test_details, search

dbt CLI

build, compile, docs, get_lineage_dev, get_node_details_dev, list, parse, run, show, test

Admin API

cancel_job_run, get_job_details, get_job_run_artifact, get_job_run_details, get_job_run_error, get_project_details, list_job_run_artifacts, list_jobs, list_jobs_runs, retry_job_run, trigger_job_run
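For orientation, the sketch below shows the kind of HTTP call that a tool like `trigger_job_run` stands for. It assumes, without confirmation from this README, that the tool wraps the dbt Administrative API v2 route `POST /api/v2/accounts/{account_id}/jobs/{job_id}/run/` with a JSON body containing a `cause`. The default host, the `Token` authorization scheme, and the function name are our assumptions. The request is only built here, never sent.

```python
import json
import urllib.request

# Assumption: the multi-tenant access URL; other deployments use a different host.
DEFAULT_HOST = "https://cloud.getdbt.com"


def build_trigger_job_run_request(
    account_id: int,
    job_id: int,
    token: str,
    cause: str = "Triggered via API",
    host: str = DEFAULT_HOST,
) -> urllib.request.Request:
    """Build (but do not send) a POST request that triggers a dbt job run."""
    url = f"{host.rstrip('/')}/api/v2/accounts/{account_id}/jobs/{job_id}/run/"
    body = json.dumps({"cause": cause}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            # Assumption: the API accepts "Token <service token>" authorization.
            "Authorization": f"Token {token}",
            "Content-Type": "application/json",
        },
    )
```

Passing the result to `urllib.request.urlopen` would actually send it; through the MCP server, an agent would call `trigger_job_run` instead and let the server handle credentials.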

dbt Codegen

generate_model_yaml, generate_source, generate_staging_model

dbt LSP

fusion.compile_sql, fusion.get_column_lineage, get_column_lineage

MCP Server Metadata

get_mcp_server_version
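With this many tools, an agent you build yourself may only want to expose a subset. The sketch below captures the tool listing as a lookup table and derives a client-side allow-list from chosen toolsets. `TOOLSETS`, `toolsets_for`, and `tools_in` are hypothetical names, and this sketch does not show how the server itself enables or disables toolsets.

```python
from typing import Dict, List, Tuple

# Tool names copied from the tool listing in this README, grouped by toolset heading.
TOOLSETS: Dict[str, Tuple[str, ...]] = {
    "SQL": ("execute_sql", "text_to_sql"),
    "Semantic Layer": (
        "get_dimensions", "get_entities", "get_metrics_compiled_sql",
        "list_metrics", "list_saved_queries", "query_metrics",
    ),
    "Discovery": (
        "get_all_macros", "get_all_models", "get_all_sources", "get_exposure_details",
        "get_exposures", "get_lineage", "get_macro_details", "get_mart_models",
        "get_model_children", "get_model_details", "get_model_health", "get_model_parents",
        "get_model_performance", "get_related_models", "get_seed_details",
        "get_semantic_model_details", "get_snapshot_details", "get_source_details",
        "get_test_details", "search",
    ),
    "dbt CLI": (
        "build", "compile", "docs", "get_lineage_dev", "get_node_details_dev",
        "list", "parse", "run", "show", "test",
    ),
    "Admin API": (
        "cancel_job_run", "get_job_details", "get_job_run_artifact", "get_job_run_details",
        "get_job_run_error", "get_project_details", "list_job_run_artifacts", "list_jobs",
        "list_jobs_runs", "retry_job_run", "trigger_job_run",
    ),
    "dbt Codegen": ("generate_model_yaml", "generate_source", "generate_staging_model"),
    "dbt LSP": ("fusion.compile_sql", "fusion.get_column_lineage", "get_column_lineage"),
    "MCP Server Metadata": ("get_mcp_server_version",),
}


def toolsets_for(tool_name: str) -> List[str]:
    """Return the toolset headings under which tool_name is listed (empty if none)."""
    return [group for group, tools in TOOLSETS.items() if tool_name in tools]


def tools_in(*groups: str) -> List[str]:
    """Flatten the selected toolsets into one sorted list, e.g. for an allow-list."""
    return sorted(name for group in groups for name in TOOLSETS[group])
```

For example, `tools_in("SQL", "dbt Codegen")` yields the five SQL and codegen tool names, which a custom agent could compare against the names the server reports before offering tools to its model.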

Examples

Most commonly, you will connect the dbt MCP server to an agent product such as Claude or Cursor. If you are interested in building your own agent, check out the examples directory to get started.
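A custom agent also has to read the server's replies. The sketch below assumes the stdio transport, where the server writes one JSON-RPC message per line and may interleave notifications with responses. It matches a response to its request id and extracts tool names from a `tools/list` result. The helper names are hypothetical and are not taken from the examples directory.

```python
import json
from typing import Iterable, Iterator, List


def iter_messages(lines: Iterable[str]) -> Iterator[dict]:
    """Yield JSON-RPC messages from a newline-delimited stream, skipping blank lines."""
    for line in lines:
        line = line.strip()
        if line:
            yield json.loads(line)


def find_response(lines: Iterable[str], request_id: int) -> dict:
    """Return the first response (result or error) whose id equals request_id.

    Notifications, server-initiated requests, and responses to other ids are skipped.
    Raises LookupError if the stream ends first.
    """
    for message in iter_messages(lines):
        if message.get("id") == request_id and ("result" in message or "error" in message):
            return message
    raise LookupError(f"no response for request id {request_id}")


def tool_names(tools_list_response: dict) -> List[str]:
    """Extract tool names from a successful tools/list response."""
    return [tool["name"] for tool in tools_list_response["result"]["tools"]]
```

In a real client, `lines` would be the server process's standard output, read line by line after sending a request such as `tools/list`.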

Contributing

Read CONTRIBUTING.md for instructions on how to get involved!
